It is well known that adjusting for one or more baseline covariates can increase statistical power in randomized controlled trials. One reason adjusted analyses are not used more widely may be a concern that results will be biased if the effects of the baseline covariates are not modelled correctly in the regression model for the outcome. For example, a continuous baseline covariate would by default be entered linearly in a regression model, but in truth its effect on the outcome may be non-linear. In this post we’ll review an important result which shows that for continuous outcomes modelled with linear regression, this does not matter in terms of bias: we obtain (asymptotically) unbiased estimates of the treatment effect even if we mis-specify a baseline covariate’s effect on the outcome.

Setup

We’ll assume that we have data from a two arm trial on $n$ subjects. For the $i$th subject we record a baseline covariate $X_i$ and outcome $Y_i$. We let $Z_i$ denote a binary indicator of whether the subject is randomized to the new treatment group ($Z_i=1$) or the standard treatment group ($Z_i=0$). In some situations the baseline covariate may be a measurement of the same variable (e.g. blood pressure) which is being measured at follow-up by $Y_i$.

A linear regression model for the data is then specified as

$$Y_i = \beta_0 + \beta_X X_i + \beta_Z Z_i + \epsilon_i$$

where $\epsilon_i$ are errors which have expectation zero conditional on $X_i$ and $Z_i$. The parameters (regression coefficients) are then estimated using ordinary least squares. We let $\hat{\beta}_Z$ denote the estimated treatment effect. The true treatment effect is

$$\Delta = E(Y \mid Z=1) - E(Y \mid Z=0)$$

Robustness to misspecification

We now ask the question: is the ordinary least squares estimator $\hat{\beta}_Z$ unbiased for the true treatment effect $\Delta$, even if the linear regression model assumed is not necessarily correctly specified? The answer is yes (asymptotically), and further below we outline the proof given by Yang and Tsiatis, in their 2001 paper ‘Efficiency Study of Estimators for a Treatment Effect in a Pretest-Posttest Trial’.

This means that for continuous outcomes analysed by linear regression, we do not need to worry that by potentially mis-specifying the effect of $X$ we may introduce bias into the treatment effect estimator. The price of mis-specifying the effect of $X$ will be a reduction in efficiency. For more on methods which adaptively model this effect, see the 2008 paper by Tsiatis et al. and also Chapter 5 section 4 of Tsiatis’ book, ‘Semiparametric Theory and Missing Data’. We also note a further result from Yang and Tsiatis, that in general a more efficient estimate can be obtained by allowing for an interaction between $X$ and treatment $Z$.
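As a rough illustration of the interaction point, the sketch below fits a model with a treatment-by-covariate interaction to one simulated trial. The data-generating model is the one used in the simulation section of this post. Centring the covariate at its sample mean (the variable `xc`, a name introduced here) is an assumption of this sketch, made so that the coefficient of `z` can still be read as an average treatment effect rather than the effect at a covariate value of zero. It is not code from Yang and Tsiatis.

```r
set.seed(1234)  # arbitrary seed so the sketch is reproducible
n <- 1000
z <- rbinom(n, 1, 0.5)          # randomized treatment indicator
x <- rnorm(n)                   # baseline covariate
y <- x + x^2 + z + rnorm(n)     # outcome; true treatment effect is 1

# centre the covariate at its sample mean before forming the interaction
xc <- x - mean(x)

# main effects only (covariate entered linearly)
mainMod <- lm(y ~ z + xc)
# treatment-by-covariate interaction
interMod <- lm(y ~ z * xc)

# with xc centred, the coefficient of z in both fits estimates
# the average treatment effect
coef(mainMod)["z"]
coef(interMod)["z"]
```

In this particular data-generating model there is no true interaction, so the two estimates should be close to each other; the interaction model is expected to help more when the covariate's effect really does differ between arms.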

Another important point to remember is that the usual ‘model based’ standard error from linear regression assumes that the residual errors have constant variance. If this assumption doesn’t hold, it’s important to account for this in our inferences. Provided the sample size is not small, this can be achieved by using sandwich standard errors, which I covered in an earlier post.
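To make the sandwich idea concrete, here is a minimal base R sketch that computes the simplest (HC0) sandwich standard errors by hand for a mis-specified adjusted model. The simulated data and the variable names `bread`, `meat`, `modelSE` and `sandwichSE` are illustrative choices, not taken from the earlier post. In practice one would more likely use a dedicated package, for example `vcovHC()` from the sandwich package.

```r
set.seed(2024)  # arbitrary seed so the sketch is reproducible
n <- 1000
z <- rbinom(n, 1, 0.5)
x <- rnorm(n)
y <- x + x^2 + z + rnorm(n)

mod <- lm(y ~ z + x)   # covariate effect mis-specified as linear

# model based standard errors, which assume constant residual variance
modelSE <- sqrt(diag(vcov(mod)))

# HC0 sandwich: (X'X)^{-1} X' diag(e^2) X (X'X)^{-1}
X <- model.matrix(mod)
e <- residuals(mod)
bread <- solve(crossprod(X))
meat <- crossprod(X * e)   # equals X' diag(e^2) X
sandwichSE <- sqrt(diag(bread %*% meat %*% bread))

cbind(modelSE, sandwichSE)
```

For the treatment coefficient the two standard errors should be similar here, because the treatment indicator is independent of the covariate. For the coefficient of `x` the sandwich standard error should be clearly larger, since the residual variance depends on `x` under this mis-specified model.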

Simulations

To illustrate these results, we perform a small simulation study. For trials of size $n=1000$, we will simulate the treatment indicator $Z \sim \text{Bernoulli}(0.5)$ and a baseline covariate $X \sim N(0,1)$. We will then simulate the outcome $Y$ from a linear regression model, but with linear and quadratic effects of $X$. The true treatment effect is set to $1$.

We perform three analyses: 1) an unadjusted analysis using lm(), equivalent to a two sample t-test, 2) an adjusted analysis, including $X$ linearly, and hence mis-specifying the outcome model, and 3) the correct adjusted analysis, including both linear and quadratic effects of $X$.

The code is given by:

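```r
nsim <- 1000  # number of simulated trials
n <- 1000     # subjects per trial
pi <- 0.5     # probability of randomization to new treatment (masks R's built-in pi)

# storage for the treatment effect estimate from each analysis
unadjusted <- array(0, dim=nsim)
adjustedmisspec <- array(0, dim=nsim)
adjustedcorrspec <- array(0, dim=nsim)

for (sim in 1:nsim) {
  z <- rbinom(n, 1, pi)
  x <- rnorm(n)
  # true treatment effect (coefficient of z) is 1
  y <- x+x^2+z+rnorm(n)

  #analysis not adjusting for baseline
  unadjustedMod <- lm(y~z)
  unadjusted[sim] <- coef(unadjustedMod)[2]

  #adjusted analysis misspecified
  adjustedmisspecMod <- lm(y~z+x)
  adjustedmisspec[sim] <- coef(adjustedmisspecMod)[2]

  #adjusted correctly specified
  xsq <- x^2
  adjustedcorrspecMod <- lm(y~z+x+xsq)
  adjustedcorrspec[sim] <- coef(adjustedcorrspecMod)[2]
}

# means: close to 1 if the estimators are unbiased
mean(unadjusted)
mean(adjustedmisspec)
mean(adjustedcorrspec)

# empirical standard deviations: smaller means more efficient
sd(unadjusted)
sd(adjustedmisspec)
sd(adjustedcorrspec)
```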

Running this, I obtained (without setting the seed, you will get slightly different results):

```
> mean(unadjusted)
[1] 0.9988225
> mean(adjustedmisspec)
[1] 0.9980142
> mean(adjustedcorrspec)
[1] 0.9995535
> sd(unadjusted)
[1] 0.121609
> sd(adjustedmisspec)
[1] 0.1090832
> sd(adjustedcorrspec)
[1] 0.0639239
```

As expected, all three estimators are unbiased. In particular, the estimator based on a mis-specified adjustment for the baseline covariate remains unbiased, as per the theory. Moreover, we see that even with the mis-specified model, the estimator is less variable than the unadjusted estimator, corresponding to a gain in efficiency. However, we also see that a much larger efficiency gain is possible if we are able to correctly specify the effect of the baseline covariate.
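As a rough sanity check on these standard deviations, one can use the large-sample approximation that the variance of the treatment effect estimator is about the residual variance left by the fitted model, divided by $n\pi(1-\pi)$, where $\pi$ is the randomization probability. This approximation and the residual variances below are worked out here for the simulated data-generating model; they are not part of the original analysis. With $X \sim N(0,1)$ we have $\text{Var}(X)=1$, $\text{Var}(X^2)=2$ and $\text{Cov}(X,X^2)=0$, so the residual variance is $1+2+1=4$ for the unadjusted analysis, $2+1=3$ with linear adjustment, and $1$ with the correct model.

```r
n <- 1000
p <- 0.5   # randomization probability (named p here to avoid masking R's pi)

# residual variance left over by each working model (derived in the text above)
residVar <- c(unadjusted = 4, misspecified = 3, correct = 1)

# approximate standard deviation of the treatment effect estimator
approxSD <- sqrt(residVar / (n * p * (1 - p)))
round(approxSD, 4)
```

These come to roughly 0.126, 0.110 and 0.063, in line with the empirical standard deviations of 0.122, 0.109 and 0.064 reported above.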

Proof

The following proof is taken from a 2001 paper by Yang and Tsiatis, which can be accessed at JSTOR here. First we centre all three variables. The variables centred by their true expectations are denoted ![]() ,

, ![]() and

and ![]() . We let

. We let ![]() denote the variables centred by their sample (as opposed to population) means. After centreing, our model for the variables centred about their population means becomes

denote the variables centred by their sample (as opposed to population) means. After centreing, our model for the variables centred about their population means becomes

![]()

where now there is no intercept because of the centreing. Note also that after centreing the variables about their corresponding sample means, we can fit the model to the empirically centred variables ![]() without an intercept, and obtain the same estimates

without an intercept, and obtain the same estimates ![]() and

and ![]() as without centreing. We now let

as without centreing. We now let ![]() and

and ![]() . The OLS estimators can then be expressed as

. The OLS estimators can then be expressed as

![]()

As the sample size tends to infinity, the OLS estimators converge in probability to

![]()

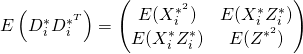

We can then derive

Then we have that since ![]() has mean zero,

has mean zero, ![]() . Since

. Since ![]() and similarly has mean zero,

and similarly has mean zero, ![]() where

where ![]() . Lastly, by randomization,

. Lastly, by randomization, ![]() and

and ![]() are statistically independent, which means that

are statistically independent, which means that ![]() . Since the off diagonal elements are zero, we then have that

. Since the off diagonal elements are zero, we then have that

![]()

To find the latter expectation we can use the law of total expectation to give

![]()

Since we can write ![]() and

and ![]() , we have that

, we have that

![]()

and so

![]()

Finally then, we have

![]()

We have thus shown that the OLS estimate of treatment effect is consistent for the true treatment effect $\Delta$ irrespective of whether the linearity assumption for the baseline covariate's effect on $Y$ is correct or not.
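The key quantities in the proof can be checked numerically on a single large simulated data set. The sketch below is an illustration only: the sample size, the seed and the names `xc`, `zc`, `yc`, `limitForm` and `olsEst` are choices made here, and the data-generating model is the one from the simulation section. It computes sample analogues of the off-diagonal element $E(X^*Z^*)$, of $E(Z^{*2})$, and of the ratio $E(Z^*Y^*)/E(Z^{*2})$, and compares the last of these with the OLS estimate from the mis-specified adjusted model.

```r
set.seed(42)  # arbitrary seed so the sketch is reproducible
n <- 1e5
p <- 0.5
z <- rbinom(n, 1, p)
x <- rnorm(n)
y <- x + x^2 + z + rnorm(n)   # true treatment effect is 1

# centre each variable by its sample mean
yc <- y - mean(y)
xc <- x - mean(x)
zc <- z - mean(z)

mean(xc * zc)   # off-diagonal element, close to 0 by randomization
mean(zc^2)      # close to p * (1 - p) = 0.25

# sample analogue of E(Z*Y*) / E(Z*^2)
limitForm <- mean(zc * yc) / mean(zc^2)
# OLS estimate of the treatment effect, covariate entered linearly
olsEst <- unname(coef(lm(y ~ z + x))["z"])

c(limitForm = limitForm, olsEst = olsEst)
```

Both values should be close to the true treatment effect of 1, even though the fitted model omits the quadratic term.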